This is it: January Jolt #31. Not a believer in numerology but…

31 is a black number on the roulette wheel that many players consider lucky perhaps because it is a prime number. Prime numbers are numbers that have only 2 factors: 1 and themselves. (Speaking of roulette, who can forget the classic movie line of Wesley Snipes: ‘Always bet on black’?)

31 has proved a lucky number for me because that was my age when my wife Marjorie accepted my proposal of marriage in 1982. And, of course, 31 signifies the original number of Baskin-Robbins flavors of which Butterscotch Ribbon was the best. (Don’t even dare to bring up Banana Nut Fudge or Coffee Candy. I mean, Coffee Candy Ice Cream?!?!)

The luck of 31 today though is that my pledge to post about testing for each of the 31 days in January is fulfilled. As promised in an earlier blog entry several days ago, there will be other posts, though not with the same frequency. And it wasn’t just about making sure that something was written every day on this blog, Testing: A Personal History. My perseverance had a purpose: to raise awareness of what testing is really like, including its significant deficiencies as currently practiced, and to argue that all of us should demand a different kind of educational measurement: specifically, no tests but for learning and no exams but for education. Today’s post will look at two concepts of testing critical to this change, but first we will merrily perform our Mailbox Monday observance.

One of the communications that uplifted my spirits about testing came from a much younger former colleague, whose talent, while extraordinary, is matched by a powerful humanity. This colleague wrote, “Your posts are always insightful and well thought out. I enjoy reading them. You are right, doing my best to slowly turn the large testing ship in the right direction. It can be a challenge but, especially now, the market is calling for change.” With individuals like this person in position to be leaders in the world of educational measurement in the very near future, perhaps all of us can feel more hopeful about where testing sails next in clearing the current pell-mell of obstacles, objections, and obstructionists.

Ken Sisk, a fellow proud and grateful graduate of Saint Peter’s Prep in Jersey City, wrote about the importance of “a test I took in 8th grade that said I should become an architect or building.” Tests that help us learn about ourselves in a way that is not only valid and fair but also motivational serve us superbly, and they also serve the wider world in which we get to contribute effectively. Thank you, Ken!

What can I say about Marianne Talbot except thank you, thank you, thank you! Marianne to me is like the friend who manages to be there shouting encouragement when you play in the old-timers’ basketball game and immediately wonder what’s going to happen first: pulled muscle, embarrassing airball, or fatal heart attack. Is there anything more advantageous to an author than someone paying such intelligent attention to what you are writing? I look forward to what Marianne will write about testing given her hands-on experience and probing sensibility. And now to our main course today: Alphabet Soup and Irish Whiskey.

Alphabet Soup

Keep Your Eye on The CBAL

ETS was the land of acronyms. Trying to understand what people were referencing in early discussions back in 2001 often led me to mutter WTF to myself. An effort led by former colleague Felicia DeVincenzi collected all of the acronyms into one glossary: there were over five hundred! (She and I wrote early in this century about the knowledge management advantage of such a list in a chapter of a book on Knowledge Management.) Today just two will take our time, but they are the two most essential from my 6767 days associated with ETS: CBAL and ECD.

CBAL stands for Cognitively Based Assessment of, for, and as Learning. (Some leader writing on this concept changed the final preposition to ‘by learning’, and I am just not smart enough to understand the criticality of that difference.) As one of the most brilliant researchers and charming colleagues I ever met, John Sabatini, put it in a paper in 2011 written with Randy Bennett and Paul Deane: “The CBAL program intends to produce a model for a system of assessment that: documents what students have achieved (of learning); helps identify how to plan instruction (for learning); and is considered by students and teachers to be a worthwhile educational experience in and of itself (as learning).” A system of assessment.

A system is “a set of things working together as parts of a mechanism or an interconnecting network.” The system part is critical in understanding why the argument at the end of this series of essays is ‘No Tests but for Learning’. As much as it is convenient and at times even productive for human beings to split the world up into arbitrary distinctions, that’s not the way organisms work. Therefore, splitting up testing and learning is counterproductive. That’s why the three authors of the above-linked paper note that to achieve the goals of this kind of holistic learning, “CBAL consists of multiple, integrated components: summative assessments, formative assessments, professional development, and domain-specific cognitive competency models … Each of the CBAL components is informed by, and aligned with, domain-specific cognitive competency models (which we describe in some detail in the next section).”

A little too technical for you? Just imagine that every task that a student does is connected to a very clear model of what they are supposed to be learning at that stage of their education and, therefore, produces evidence of how that learning is proceeding for that individual. That’s CBAL.

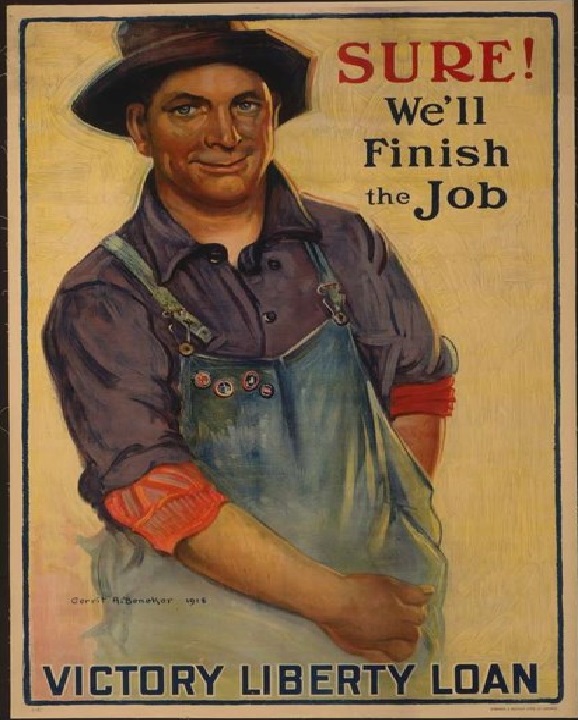

Yes, making this happen instead of what currently goes on in classrooms is a mammoth undertaking that would involve very different ways of teaching in order to establish these “relationships among assessments, instruction, and the cognitive competency models.” That’s why I keep using propaganda posters as the illustrations for these last January Jolts: to evoke the kind of massive effort necessary. Randy Bennett and others conceived the CBAL system model as an educational intervention AND an indicator of student achievement. Many of the criticisms that testing has faced within the K-12 world from teachers, parents, and even the students themselves ooze from bisecting education into those two domains.

Ironically, the earliest pioneers of what is now called standardized testing, including Carl Brigham (who, as noted in an earlier post, had promoted eugenic and racist opinions that he later repudiated), wanted nonprofits like ETS and ACT to do testing that would help learning, that was formative. You can read about it in this paper by Randy Bennett.

Unfortunately, movement from the testing establishment to make CBAL happen is thus far stupendously insufficient. ETS’s own webpage on CBAL appears not to have been updated in years, still referring to pilot tests on a concept that they have worked on since 2007. This snail’s pace of progress in promulgating and promoting this kind of testing is not the fault of the researchers; having spent my last two years at ETS in the role of Knowledge Broker solely within R&D, I saw that the intellectual capital necessary for this shift to occur on a large scale already exists. What’s missing is institutional oomph, which seems to be dedicated instead to operating like a business when the whole point of having nonprofit entities and governmental bodies is that they are not supposed to be businesses.

Indeed, as Bennett feared in the above-linked paper, the incessant quest for profit and the paucity of imagination in testing organizations and governmental departments increasingly controlled by their finance functions become the reason for not doing something different, for not doing something urgently needed: switching to a philosophy of no tests but for learning; no exams but for education.

Randy Bennett himself wrote of the danger of these nonprofit organizations tilting too much to one side or the other:

“ETS is a nonprofit educational measurement organisation with public service principles deeply rooted in its progenitors and according to the Internal Revenue Service. Both its progenitors and the framers of the tax code were interested in the same thing: providing a social benefit. It is reasonable to argue that, in the 21st century, renewed meaning can be brought to ETS’s mission by attacking big challenges in education and measurement and using assessment to increase diversity. But through the history, we can see that the purpose of a nonprofit organisation like ETS is not about making money. Its purpose is also not about making money to support its mission. Its purpose is about doing its mission and making enough money in the process to continue doing it in better and bigger ways.

If ETS succeeds in being responsive without being responsible, or in being responsible without being responsive, it will have failed its main mission. ETS, and kindred organisations must do both. Doing both is what it means to be a nonprofit educational measurement organisation in the 21st century.”

It may seem like I’m picking on my old employer and could be accused of ingratitude or sour grapes, but the same requirement applies to every other not-for-profit educational organization and to every governmental entity associated with education, as the latter are supported by tax dollars. We need a movement to move all of them in this direction, and if a group of dedicated moms could effectively scuttle the grand plans of Common Core, No Child Left Behind, and Race to the Top, then they could in this instance build something with the same energy with which they tore down those bureaucratic edifices.

The gargantuan ambition of this initiative dictates that we start with methods that are more accessible and possible while pushing everyone to move towards this larger goal of inculcating CBAL throughout K-12 education. And that’s where ECD, first articulated back in 2003 by former colleagues and current friends Bob Mislevy, Russell Almond, and Jan Lucas, comes into play.

EASY ECD

If we could teach every teacher in the K-12 universe how to use the methodology known as evidence-centered design, or ECD, the potential differences in how they looked at lining up the tasks of instruction could prove game changing. It would supercharge the overall Assessment Literacy of these key educators. Assessment Literacy is the knowledge about how to assess what students know and can do, interpret the results of these assessments, and apply these results to improve student learning and program effectiveness. Former ETSer Rick Stiggins, in 1991, said that ‘assessment literates’ ask two key questions about all assessments of student achievement: What does this assessment tell students about the achievement outcomes we value? What is likely to be the effect of this assessment on students?

If teachers have greater assessment literacy, then that divide between assessment and instruction closes if not fully disappears. Let me review some lines from a paper about classroom assessment that was written by Christine Lyon and some former ETSers: Siobhan Leahy, Marnie Thompson, and my good friend Dylan Wiliam:

“There is intuitive appeal in using assessment to support instruction: assessment for learning rather than assessment of learning. We have to test our students for many reasons, and, obviously, such testing should be useful in guiding teaching. … We can use the results to make broad adjustments to curriculum, such as spending more time on a unit, reteaching it, or identifying teachers who appear to be particularly successful at teaching particular units. But if we are serious about using assessment to improve instruction, then we need more fine-grained assessments, and we need to use the information to modify instruction in real time.

What we need is a shift from quality control in learning to quality assurance. Traditional approaches to instruction and assessment involve teaching some given material, and then, at the end of teaching, working out who has and hasn’t learned it–akin to a quality control approach in manufacturing. In contrast, assessment for learning involves adjusting teaching while the learning is still taking place–a quality assurance approach. Quality assurance also involves a shift of attention from teaching to learning. The emphasis is on what the students are getting out of the process rather than on what teachers are putting into it, reminiscent of the old joke that schools are places where children go to watch teachers work.

In a classroom that uses assessment to support learning, the divide between instruction and assessment blurs.”

But getting teachers ‘assessment literate’ has proved very difficult. Plake and Impara in 1996 wrote that “It is estimated that teachers spend up to 50% of their instructional time in assessment-related activities. … It has been found that teachers receive little or no formal assessment training in the preparatory programs and often they are ill-prepared to undertake assessment-related activities.” Twenty-five years later, Carla Evans admitted that “Assessment literacy, or the lack thereof, is one key reason why attempts to establish balanced systems of assessment with sufficient scope and spread have largely stalled.” (Emphasis added) A 2017 doctoral dissertation by Mark Snyder concluded in part that “The assessment literacy scores [gained through his surveys and research] are suggestive of only partial mastery of the standards of teacher competence in educational assessment of students.” (Emphasis added AGAIN) In 2009, W. James Popham, a Professor Emeritus of education at UCLA, wrote: “Many of today’s teachers know little about educational assessment. For some teachers, test is a four-letter word, both literally and figuratively. The gaping gap in teachers’ assessment-related knowledge is all too understandable. The most obvious explanation is, in this instance, the correct explanation. Regrettably, when most of today’s teachers completed their teacher-education programs, there was no requirement that they learn anything about educational assessment. For these teachers, their only exposure to the concepts and practices of educational assessment might have been a few sessions in their educational psychology classes or, perhaps, a unit in a methods class.”

In 2019, one of the last R&D Knowledge Exchange sessions facilitated by me featured my friends Cara Cahalan Laitusis and Lillian Lowery talking about ‘Student Assessment and ETS Professional Educator programs’. Cara was then Senior Research Director of the Center for Student and Teacher Research in Research and Development and Lillian spoke from her position and experience as VP & COO STA K12 & TC as well as a former Maryland State Superintendent of Schools and Delaware Secretary of Education. They both acknowledged the problem. We spoke of the need for improved assessment literacy among teachers. The admission was that much work still needed to be done despite this history of diagnoses of holes in this knowledge for those educators going back decades.

And that’s where ECD becomes vital. “ECD is a systematic way to design assessments. It focuses on the evidence (performances and other products) of competencies as the basis for constructing excellent assessment tasks.” As Bob Mislevy wrote, “ECD provides a conceptual design framework for the elements of a coherent assessment at a level of generality that supports a broad range of assessment types – from familiar standardized tests and classroom quizzes, to coached practice systems and simulation-based assessments, to portfolios and student-tutor interaction.” We must make the knowledge and practice of ECD fundamental in our K-12 world. Val Shute (whom I was very sorry to see leave ETS for FSU), Yoon Jeon Kim, and Rim Razzouk wrote a brilliant little book entitled ECD for Dummies. If you have kids, reading the book yourself will prove very useful, but asking their teachers whether they understand ECD could be even more powerful. I’m grateful to Val and her co-authors because their wonderful piece saves me the trouble of further explaining ECD. Read what they have written and you will recognize that if teachers practiced this concept, learning would be more effective for every student.

And that sentiment is what these 31 posts tried to explore and effect: making learning more effective for everyone by understanding that testing, when done in the most enlightened fashion and unseparated from learning itself, is a powerful and valuable resource for all of us.

What About the Irish Whiskey?

Oh, you’re wondering about the Irish whiskey? What part does that play? That’s what I’m going to drink two fingers of now that I have finished 31 posts in 31 days: the January Jolts of this blog, Testing: A Personal History.

Slainte! And remember: NO Tests But For Learning!!!

I cannot quite believe that the January Jolts have come to an end. This final piece sums it all up nicely for me. I started commenting with my Course Leader for the Chartered Institute of Educational Assessor’s hat on (see https://www.herts.ac.uk/ciea/ciea-qualifications), but it became apparent after a few days that, as usual, what I was reading and writing applied across my professional assessment life – to my paid work, yes, but also to my volunteering as a primary school governor, and to my life as a doctoral student (see my research profile at: https://essl.leeds.ac.uk/education/pgr/1578/marianne-talbot). If only assessment had the higher profile in teacher education that it deserved. If only formative assessment was better appreciated and employed more frequently for judicious purposes. If only those designing summative assessment better understood the end-to-end process (and few of us do, myself included. And when I say end-to-end, I am talking about a very long timescale and very broad context; this is probably entirely unrealistic, but I do think we, collectively, could do better). If only I can play my part in improving the ‘system’ I am very much part of. At the very least, I can ask questions and be provocative, a la T.J.

Thanks for enhancing the conversation – I look forward to continuing it.

Ah, Marianne. Such wise and warm words yet again. I will miss the daily connection but look forward very much to staying in touch. Thanks again.