Double digits now! This is the tenth post of the thirty-one promised January Jolts for Testing: A Personal History.

For new readers (and if LinkedIn stats are valid, we have many of those), here is an explanation of why this blog exists:

Having been thrust accidentally into the world of testing 20 years ago, and having spent most of the ensuing two decades as Chief Learning Officer at ETS…

1. I can’t stop thinking about testing. Habits are hard to break.

2. I can’t stop collecting links, articles, and books on the subject.

3. I find it well-nigh impossible to avoid daily rabbit holes on genetics and intelligence, measuring success, anti-testing movements, and so much else in this domain. And it’s a big domain, because it’s connected to education.

4. There is another reason I took on this strand of blogging, one quite different from what I’ve been doing for the past three years as a playwright over at Knowledge Workings: I fear that we might lose an opportunity to do the best kind of testing, cognitively based assessment of, by, and for learning (CBAL). And we need that different type of assessment very much right now.

Strong recent sentiments, and even actions, against standardized testing are one source of the fear in #4. But the inertia of the so-called ‘testing industry’ and the status-quo approach to assessment throughout the educational establishment add to the concern. Their inability to change, even in the face of evidence that change is necessary, feeds my fear of losing the opportunity to shift almost all of our testing to formative assessment. That inertia leads us at every level (state and district, elementary, high school, and college) to give mostly the same tests over and over again. And that testing regimen imposes a rent on our lives, payable not just in the money spent constructing the tests but also in the scarce resources of attention and time that would be needed to make the shift. This inertia delays the implementation of CBAL because the legacy testing system eats up all of the available resources, which were already scant compared to those of many other educational initiatives.

If you care about your family and you care about this country, then you should care about education. If you care about education (and I recognize this is not a sentiment shared by all), then you should care about having the most valid, reliable, fair, and formative assessments available to help everyone develop to their highest potential.

Now you know again why I created the blog Testing: A Personal History.

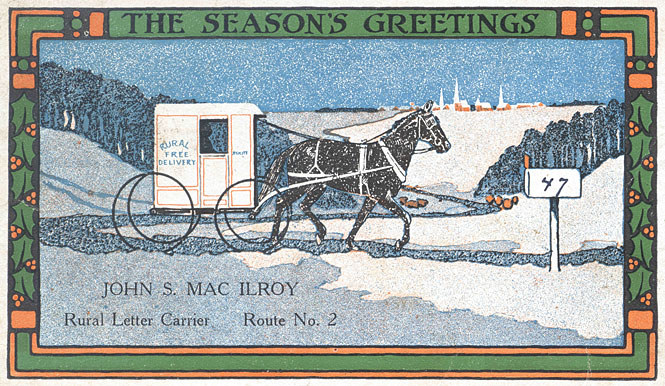

Of course, I hoped that the narrative would NOT just be my thoughts on the subject, but rather an agglomeration of opinions. Hence my designation of Mailbox Monday, the post where I take a look at those other opinions. There have been some interesting ones, but one correspondent stands out far above the rest. Marianne Talbot is a savior: how lucky am I to have such a willing participant in this dialogue? She comments on every post, and in this comment she gathers not one but two examples of tests that readers have experienced which, by dint of their design or administration, seemed incapable of delivering a valid score. Here is that comment:

“…I do, however, think that test developers should be able to justify their choices, explaining why they are the ‘best’ or perhaps ‘fairest’ manifestation for that assessment – why that type or mode of assessment, why those specific questions/format, why that order or sequence, why are those answers most appropriate (valid)? For example, an A level paper might have three assessments: a two-hour written paper which is a combination of a section of multiple-choice questions and another section of short-answer questions, a 90-minute practical test, and another two-hour written exam where candidates must write two essays in response to two of six possible questions. I hasten to add that I have made this example up, but it is not untypical. Why use this combination of assessment formats, question types, response frameworks? It’s probably not perfect, but by combining a range of formats etc, does it make the overall assessment fairer, maybe more balanced? Or is it just the least-worst option?! That’s potentially quite a long way from perfect!”

Brilliant! In a subsequent comment, Marianne extended the concerns above: “I am fascinated by the general lack of understanding (or even interest) of validity in relation to assessment – in the general public mainly, but also sometimes in the teaching profession (including university lecturers – wouldn’t you think assessment professionals would know better/be curious?)”

This concern gets at one of our key questions: how good are the assessment literacy and assessment practice of teachers at every level of our educational system? To re-quote Marianne, the questions of ‘why that type or mode of assessment, why those specific questions/format, why that order or sequence’, and why a particular interpretation of validity, for the most part remain unanswered and often go unasked in assessment of learning.

Serendipitously, a different kind of mailbag entry, a post and link from Karen Riedeburg (another person I got to know at ETS) that turned up in my feed, raises the same question in an even broader way. Karen considers a conclusion of the article she links in the post: “And I also wonder about the assertion, which seems commonplace and unquestioned (not just in this article) that one feature of ‘the structures and systems of higher education’ is that ‘faculty members are given few incentives for, if not actively discouraged from, improving their teaching.’”

The article by Beth McMurtrie raises the alarm that, as “teaching reformers argue, the dangers of ignoring the expanding body of knowledge about teaching and learning are ever more apparent.” Included in that expanding body of knowledge, and even alluded to in the example about active learning at the beginning of the article, is assessment literacy, or even just the belief that assessment of learning is possible.

One of the authors McMurtrie cites in the article is Sarah Rose Cavanagh, author of The Spark of Learning. Cavanagh finds that a divide among educators often comes down to this question: “Can you measure learning?” “If you don’t believe you can, in a quantitative way,” Cavanagh says, “then you’re never going to believe a research study that shows pedagogical technique XYZ boosted exam scores.”

And you’re not going to be concerned about the validity of any assessment you might do, because maybe you don’t think it matters. That’s a big mistake. Not for the usual reason, captured in tried truisms such as “What gets measured matters, and what matters gets measured.” It’s a big mistake because the relationship between learner and teacher should be empathic, grounded in understanding and appreciating the student’s experience of the learning encounter. I can’t think of any accepted educational methodology or theory that doesn’t require soliciting and using feedback from the learner. But relying on learners’ self-reports alone may prove insufficient. We now have opportunities not only to test in such a way that a score (or a set of related scores) helps us understand aspects of learning, but also, as McMurtrie notes, to be “mining the data that can be found in learning-management systems and institutional research offices to ask very specific questions, such as: How does the amount of time a student spends watching video lessons or doing online reading correlate to grades.” We should want valid methods and measures that tell us, along multiple dimensions, about the learning experience of each of our students.

Speaking of validity, I can’t resist letting Marianne have the last word via her latest comment, though in her generous way she hands ‘the mic’ to my friend and former colleague Michael Kane on the subject of validity:

“…I believe good assessment needs to consider validity at all levels of granularity – what might appear to be valid at one level really might not be at another level. See Kane’s work on item design and test design/review of the field – I would add qualification design as an additional layer to be considered. In “Current Concerns in Validity Theory” (2001; available at this link), Kane articulates validity as follows:

‘Validity is not a property of the test or of the test scores, but rather an evaluation of the overall plausibility of a proposed interpretation or use of test scores […that…] reflects the adequacy and appropriateness of the interpretation and the degree to which the interpretation is adequately supported by appropriate evidence’.

Furthermore, he says:

‘Validation includes the evaluation of the consequences of test uses […and…] inferences and any necessary assumptions are to be supported by evidence; and plausible alternative interpretations are to be examined’

But then he also says: ‘Validation is difficult at best, but it is essentially impossible if the proposed interpretation is left unspecified’. His concluding remarks are an excellent synopsis of how we have got to where we are…”

And where we are is where we will leave it until tomorrow’s post.

I could not agree more with your opening bullets; I find this whole arena fascinating. I am lucky enough to be working and studying in the field, so I feel vindicated in visiting many rabbit holes daily. I also get frustrated by the inertia of many in the education (and perhaps especially assessment) ‘industry’, but I am also inspired by the many thoughtful and dedicated teachers I meet through my own teaching, who do ‘get it’ and want to effect real change for the better in their own assessment practice by improving their assessment literacy. The ripple effects of such individuals should not be underestimated: the energy when several of them get together (even virtually) for training is palpable. However, can the drive of the few overcome the resistance to change of the politicians and purse-string holders? After all, they went to school, so they are experts in education…

Thank you so much for acknowledging and using my comments – very happy to share and see what others think too. Like you, I cannot claim to have all the answers but I am eager to engage with the discussion and learn from others.