Many friends looking at my work history of well over 50 years perceive a kind of crazy quilt (is that too outdated a metaphor?) of careers. While there were many different jobs, ranging from girls’ basketball coach to reform school teacher to alcoholism counselor to actor and playwright to barely competent bartender to psychiatric hospital executive to airline employee relations guy to organizational consultant and finally Chief Learning Officer at ETS, the common thread for me has been an interest in knowledge: its acquisition, sharing, distortion, and application. Thus, even though I now spend most of my time in the world of theater, a delightful and blessed return to what I was doing in my early 30s, I can’t quit my interest in how knowledge shapes our lives.

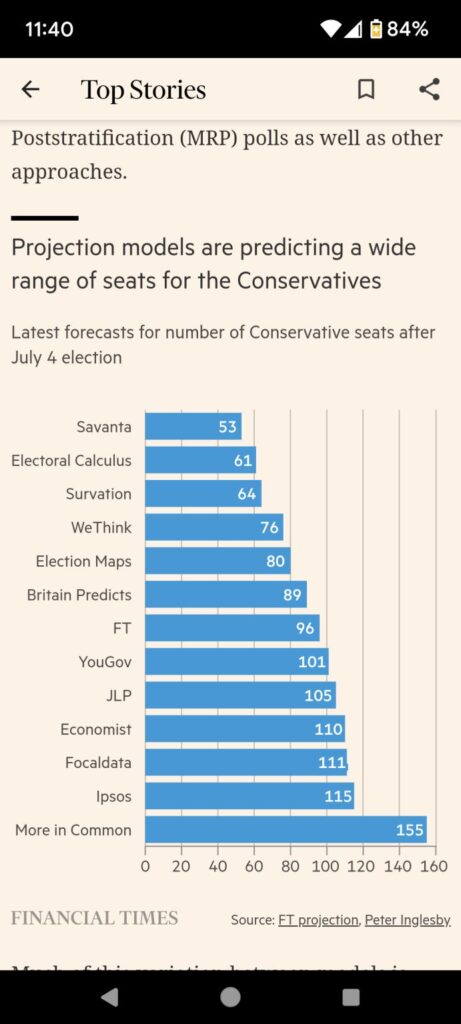

One of the forms of knowledge that most powerfully shapes our lives is the poll. Poll results, especially political ones, are unavoidable for anyone who follows the news media. And there are very good data scientists running polls. However, the desire to predict what comes next does not produce flawless knowledge. Take a look at the image in this post: it’s a screenshot from the Financial Times of the projections of how many seats the Conservative Party would win in last week’s election. The estimates range from a low of 53 to a high of 155. The actual total was 121 seats. Three pollsters came within 10% of that number, but they underestimated it. Five of the polls underestimated it by 34%.
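
For readers who want to check the arithmetic, here is a small Python sketch that measures a projection’s error as a percentage of the actual result. It uses only the figures quoted in this post (the lowest and highest projections and the actual 121 seats); the individual pollsters’ numbers are in the screenshot and aren’t reproduced here, and the function name is my own, purely illustrative.

```python
def percent_error(predicted: int, actual: int) -> float:
    """Hypothetical helper: signed error as a percentage of the actual result.

    Negative values are underestimates, positive values are overestimates.
    """
    return (predicted - actual) / actual * 100


ACTUAL_SEATS = 121  # Conservative seats actually won, as reported in the post

# Only the two extremes of the projection range are quoted in the post.
for label, predicted in [("lowest projection", 53), ("highest projection", 155)]:
    print(f"{label}: {predicted} seats, {percent_error(predicted, ACTUAL_SEATS):+.1f}%")
```

By this measure the lowest projection missed by about 56% and the highest overshot by about 28%; being “within 10%” of 121 means projecting roughly 109 to 133 seats.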

My point here is that polls are now accepted as knowledge, but often without the caveats that not all polls are equal and that polls are occasionally very wrong. They are knowledge in the sense that they represent a particular interpretation of data gathered from a particular set of people at a particular time. But they are not knowledge of the highest quality; that is, they don’t represent reality as accurately as other measurements do.

As the Pew Research Center noted, “The real margin of error [in political polls] is often about double the one reported. The notion that a typical margin of error is plus or minus 3 percentage points leads people to think that polls are more precise than they really are.” Yet, imprecise as they are, polls can become self-fulfilling false prophecies.
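
To see where the familiar “plus or minus 3 points” comes from, here is a rough Python sketch of the textbook 95% margin of error for a simple random sample. The sample size of 1,000 is my own hypothetical choice, and the formula captures sampling error only; the doubling at the end simply applies Pew’s rule of thumb and does not model the other sources of error Pew has in mind.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Textbook 95% margin of error, in percentage points.

    Assumes a simple random sample of size n; p = 0.5 gives the widest margin,
    and z = 1.96 is the normal critical value for 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n) * 100


reported = margin_of_error(1000)  # hypothetical sample of 1,000 respondents
print(f"reported: ±{reported:.1f} points")
print(f"about double (Pew's rule of thumb): ±{2 * reported:.1f} points")
```

With 1,000 respondents the formula gives about ±3.1 points, which is where the typical reported figure comes from; doubling it, as Pew suggests, gives roughly ±6.2 points.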

I’m not against polling, but the way its results are used illustrates one of the dangers of misunderstanding measurement. Nate Silver, in the immediate aftermath of the 2020 presidential election, acknowledged the limitations of polls but argued that they still add value to our political deliberations. Answering the rhetorical question of whether polls were becoming less accurate over time, Silver claimed that “The answer is basically no, although it depends on what cycle you start measuring from and how your expectations around polls are set.” The latter part of that answer highlights the problem for me: most citizens don’t know enough about how statistics and measurement work to expect anything other than that a poll will predict the result accurately.

E.J. Dionne and a co-author, in their introduction to a Brookings Institution issue on the good, the bad, and the ugly of polling, write: “But it is precisely because of our respect for polling that we are disturbed by many things done in its name.” Yet these analyses did not look at the effect of bad polling on public life. James Rosen, in a Boston Globe article this year, concluded that “the problem with polls might have more to do with our outsized expectations of them than the surveys themselves.”

But a course offered by the State University of New York gets at the perhaps unintended effects of polling: “Elections are the events on which opinion polls have the greatest measured effect. Public opinion polls do more than show how we feel on issues or project who might win an election. The media use public opinion polls to decide which candidates are ahead of the others and therefore of interest to voters and worthy of interview.”

Sophia A. McClennen argued in Salon two years ago, sagely but (so far) futilely, that we should stop obsessing about polls: “[Current polling behavior] is bad for a functioning democracy. Focusing on who will win takes attention away from why a candidate should or shouldn’t win, what they stand for and what policies they may advocate. When polls suggest clear winners and losers, they eliminate the role citizens play in the process by suggesting their role as voters is unnecessary. Turning everything into a game that can be predicted eclipses the fact that democracies require ongoing engagement, public debate and responsive institutions.”

Exactly. Paying attention to the lower-quality knowledge that is a poll (a fact acknowledged by the best in the profession themselves) leaves less room for higher-quality knowledge: what kind of capability has a candidate demonstrated, what plans do they articulate and how well do they do so, and what kind of character have their past actions shown? That’s the knowledge we need, and the focus on polls may blind us to those more important elements.